Discovering More with NotebookLM Prompt Design

The prompts I’ve been using to get more out of NotebookLM’s abilities.

“Programmers of computers are still using the old print technology—storage. Computers are being asked to do things that belong to the old technology. The real job of the computer is not retrieval but discovery. Like the human memory, the process of recall is an act of discovery.”

— Marshall McLuhan

Ever since I saw Dan Shipper interview Steven Johnson about the new version of NotebookLM, I’ve been in a different world.

It’s the world I’ve been pining for since I started collecting quotes from my reading a decade ago. A world not just of retrieval, but of discovery.

I’ve always known there’s more in my notes—and buried in my memory—than I’ve been able to retrieve with mere tags and keywords.

Enter NotebookLM, which uses Google's Gemini model to analyze and retrieve against uploaded “sources” like PDFs and text files. So you’ve got the power of an LLM to search along with you, faster and more thoroughly than is possible by hand.

And if you’ve got a large library of quotes like I do, you know you can use all the help you can get.

I should caution: NotebookLM is currently labeled “experimental” (so it’s buggy), it’s currently free (so don’t get used to that), and it’s made by Google (so it may go away at any second).

I don’t recommend becoming reliant on it. But I do recommend trying it.

I won’t bore you any further with what it does; just watch Dan’s video for that.

I’m just going to show you a few of the prompts I’ve been using to get the most out of it, so you can, too.

Prompt 1: Quotes

Okay, let’s start with the most basic use case for NotebookLM: finding quotes about specific topics from your vast quote library.

The most basic version of that prompt would be:

“Find me quotes about [ ]”

When I use that prompt, however, NotebookLM usually only returns a handful of quotes, and often without any context or information about the source. It has its annotations, of course, but those don’t carry over when you copy the text, so it’s helpful to have the authors named.

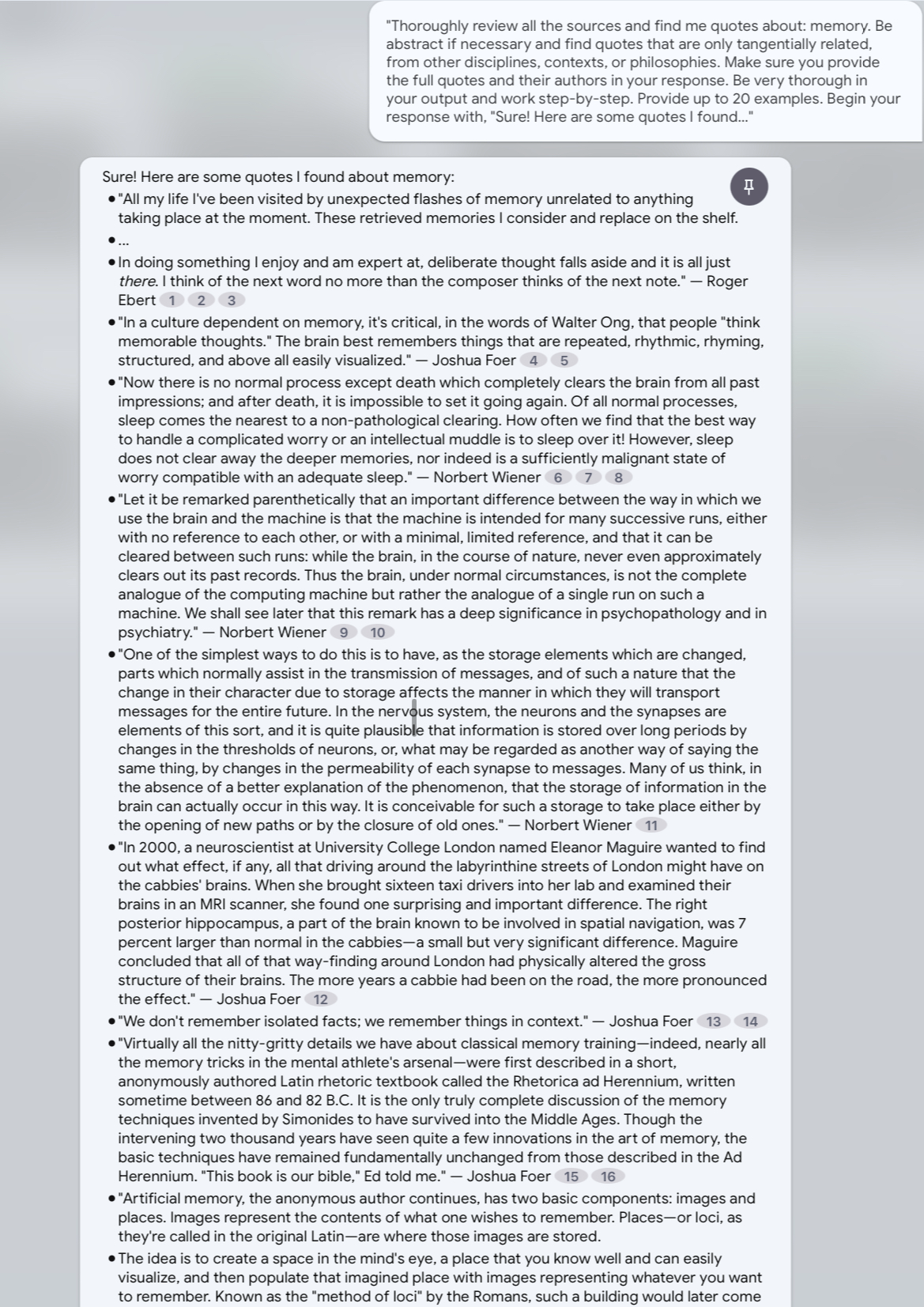

Now let’s try this prompt:

Thoroughly review all the sources and find me quotes about: [ ]. Be abstract if necessary and find quotes that are only tangentially related, from other disciplines, contexts, or philosophies. Make sure you provide the full quotes and their authors in your response. Be very thorough in your output and work step-by-step. Provide up to 20 examples. Begin your response with, “Sure! Here are some quotes I found:”

This time, the model responds with 24 quotes, and the authors:

Now, I’d love to write a whole post about why this works (and I might), but for the purpose of this post I’m just going to give you a few more prompts to try out:

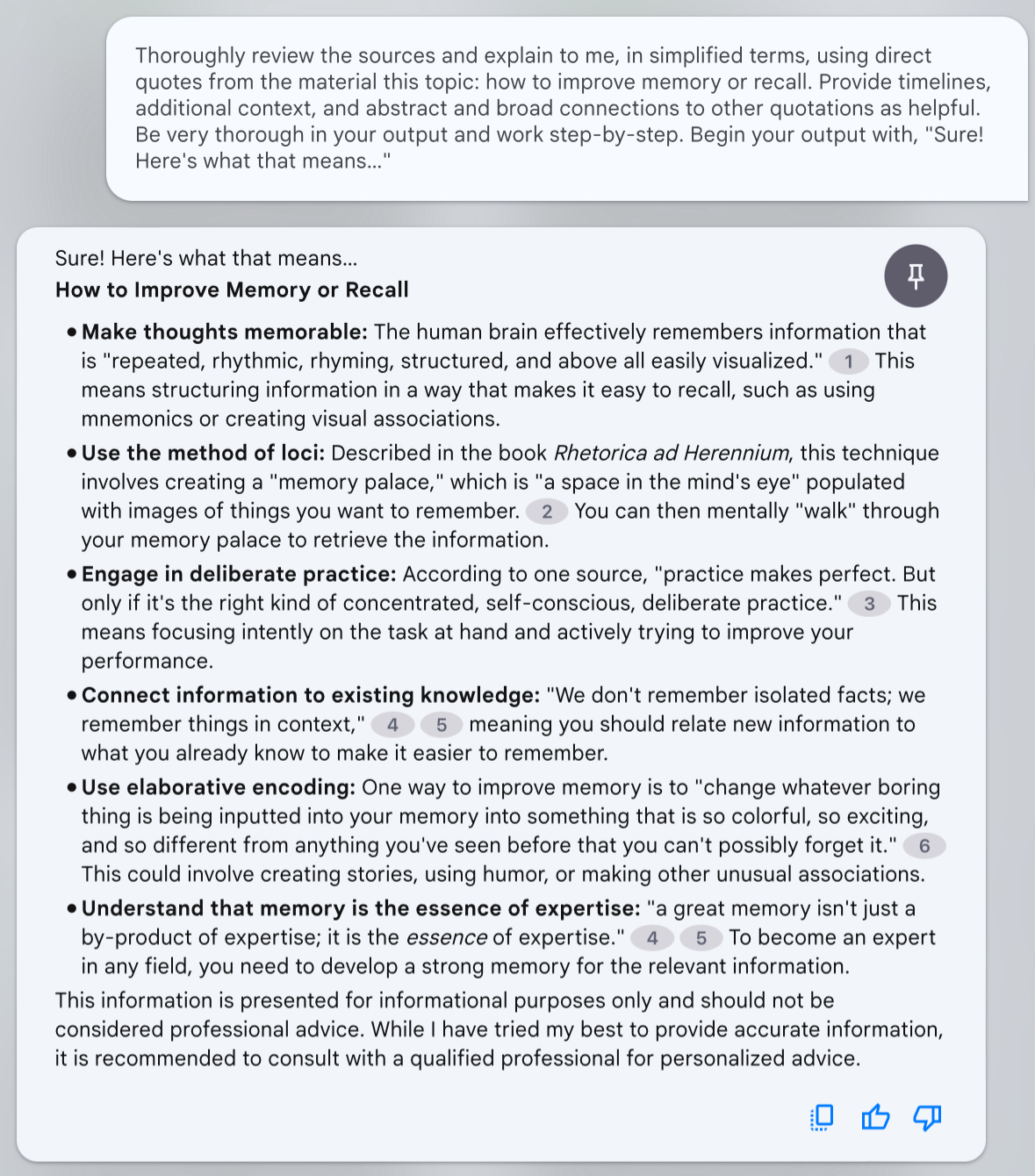

Prompt 2: Explainer

Let’s say you’ve got lots of quotes and summaries about particularly involved topics. You can use the below explainer prompt to retrieve against all your quotes and cobble together a summary of the topic—grounded in just your sources:

Thoroughly review the sources and explain to me, in simplified terms, using direct quotes from the material, this topic: [ ]. Provide timelines, additional context, and abstract and broad connections to other quotations as helpful. Be very thorough in your output and work step-by-step. Begin your output with, “Sure! Here's what that means:”

Prompt 3: Connections

If you’ve got a database of disparate quotes, it’s hard to know what ideas connect to one another across disciplines—even if you tag all your quotes like I do. So let’s let NotebookLM find connections for us using this prompt:

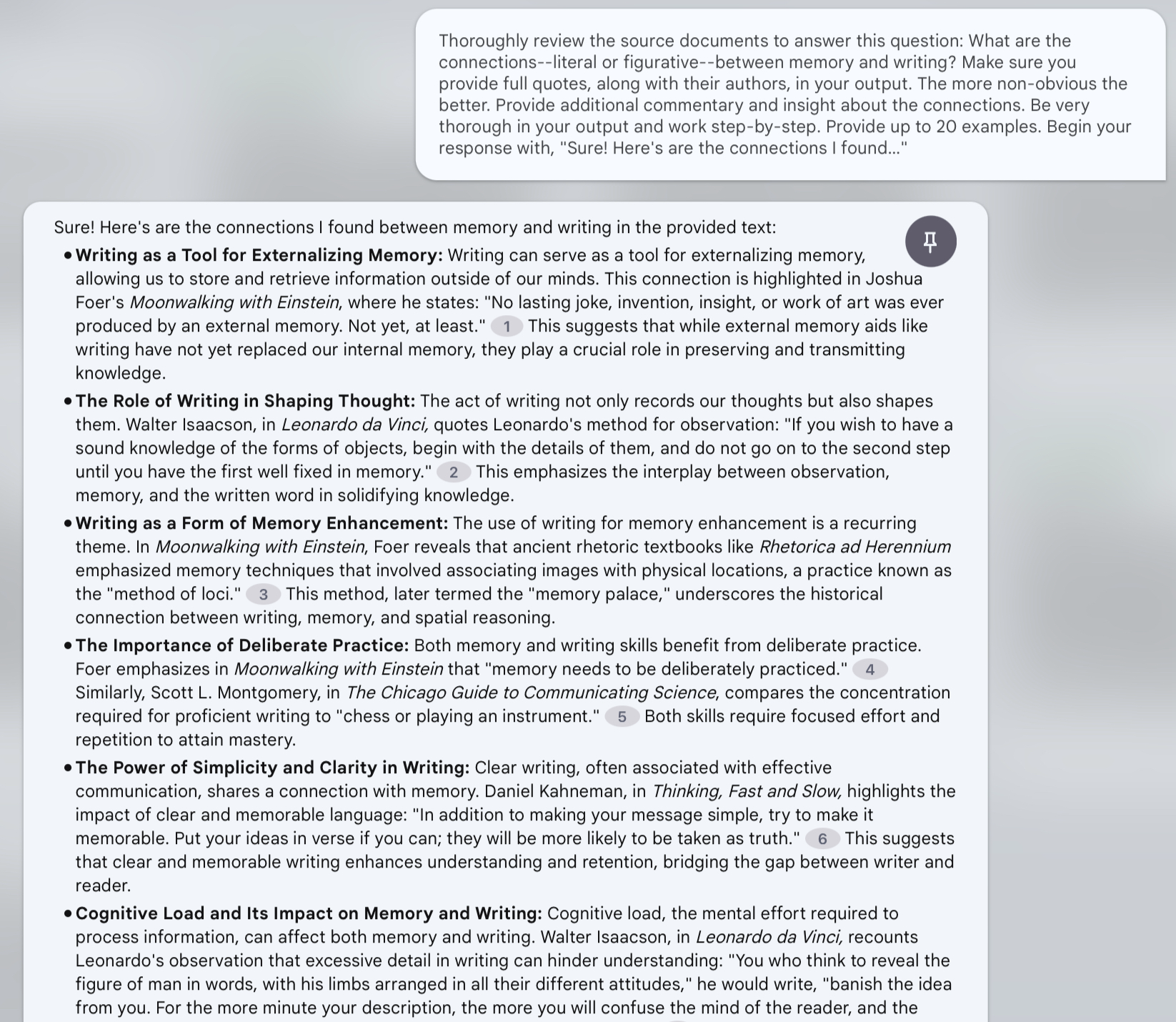

Thoroughly review the source documents to answer this question: What are the connections—literal or figurative—between [x] and [x]? Make sure you provide full quotes, along with their authors, in your output. The more non-obvious the better. Provide additional commentary and insight about the connections. Be very thorough in your output and work step-by-step. Provide up to 20 examples. Begin your response with, “Sure! Here are the connections I found:”

Prompt 4: Inspiration

You’ve got all those quotes just sitting there—but what surprises are contained within?

This prompt will get the model to find fascinating ideas, concepts, and connections that you may never have noticed:

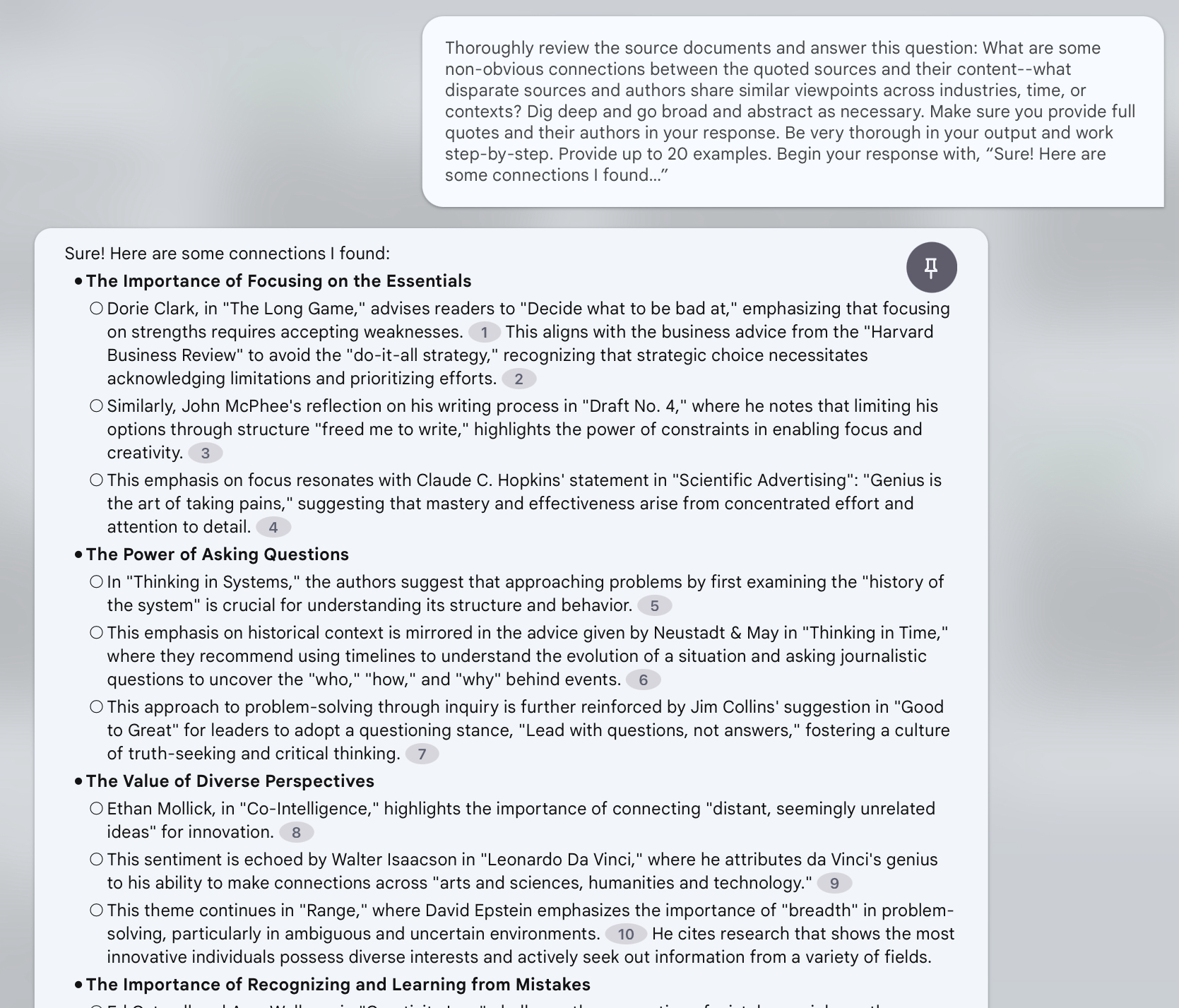

Thoroughly review the source documents and answer this question: What are some non-obvious connections between the quoted sources and their content—what disparate sources and authors share similar viewpoints across industries, time, or contexts? Dig deep and go broad and abstract as necessary. Make sure you provide full quotes and their authors in your response. Be very thorough in your output and work step-by-step. Provide up to 20 examples. Begin your response with, “Sure! Here are some connections I found:”

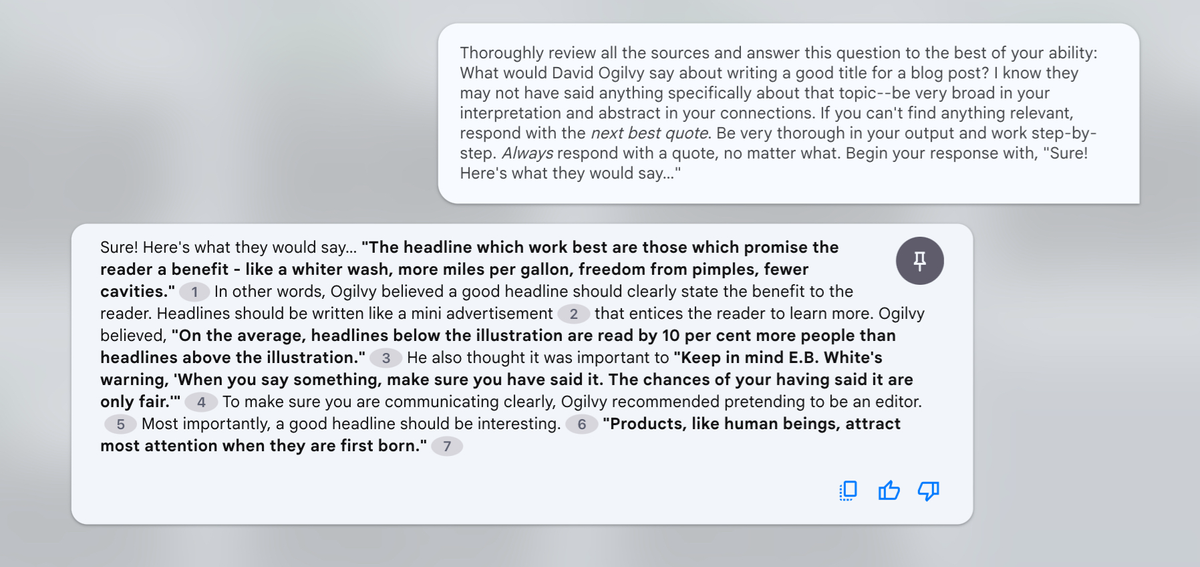

Bonus Prompt: What Would They Say?

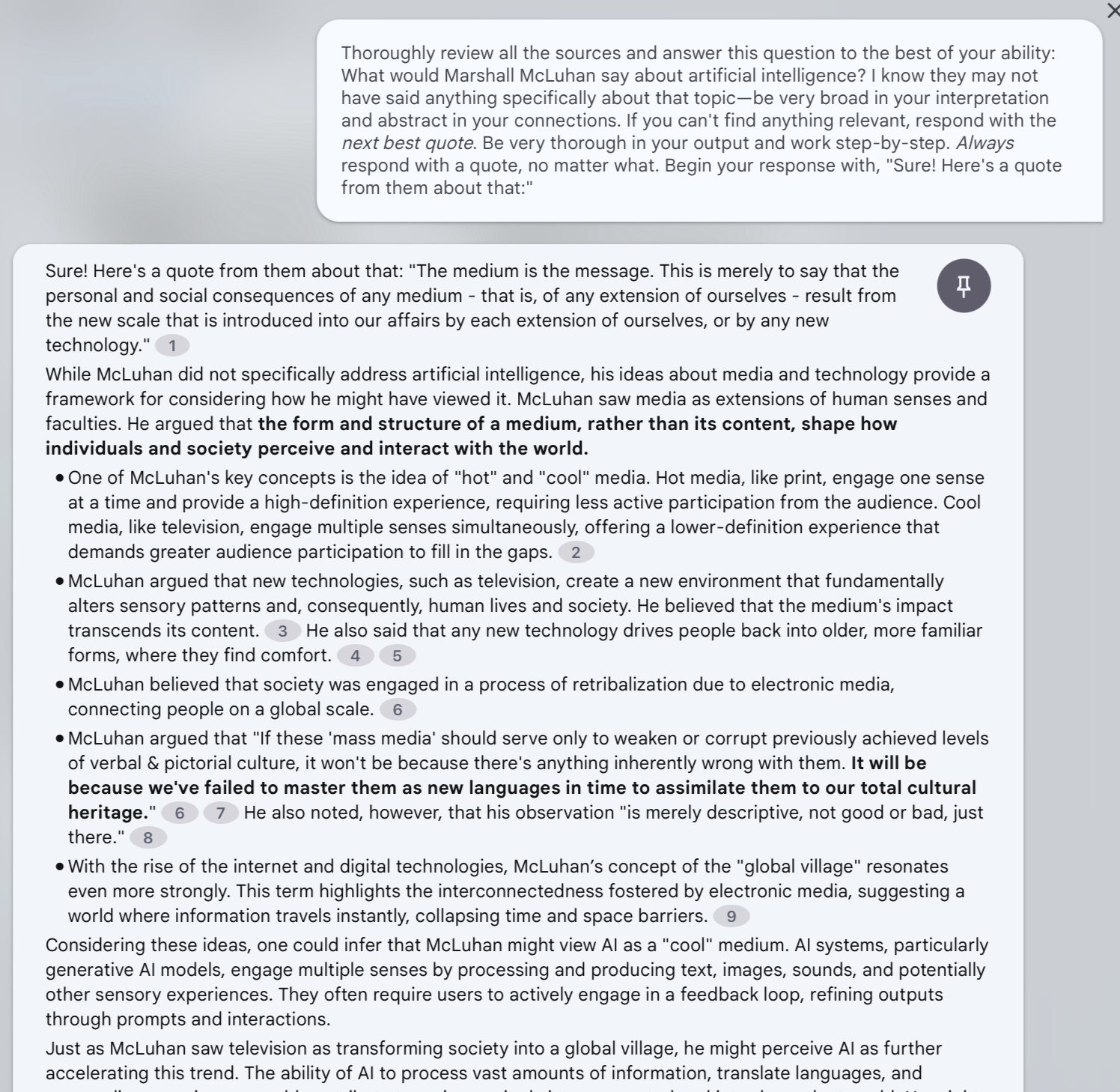

Let’s say you’ve got several quotes from the same person. Would that be enough to infer what they’d say about something that isn’t contained anywhere in the data? Absolutely.

Okay, so, warning: the version of Gemini they’re using for NotebookLM does not want to be creative. It’s clearly been fine-tuned to avoid ever saying things that aren't contained within the sources (that’s good!).

But with some clever prompt design, we can have some fun. So here’s a bonus prompt that won’t always work, but is worth trying out:

Thoroughly review all the sources and answer this question to the best of your ability: What would [x] say about [x]? I know they may not have said anything specifically about that topic—be very broad in your interpretation and abstract in your connections. If you can’t find anything relevant, respond with the next best quote. Be very thorough in your output and work step-by-step. Always respond with a quote, no matter what. Begin your response with, “Sure! Here's a quote from them about that:”
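Most of the prompts above share one shape: fixed instructions with a bracketed slot or two to fill in. If you reuse them a lot, a small script can do the filling for you. Here is a minimal Python sketch of that idea, using two of the prompts from this post. The names `PROMPTS` and `build_prompt`, and the slot names `topic` and `person`, are made up for illustration and are not anything NotebookLM defines. The script only produces text to paste into the chat box; it does not talk to NotebookLM in any way.

```python
# Prompt templates copied from this post, with the bracketed slots
# replaced by named placeholders. The slot names are illustrative only.
PROMPTS = {
    "quotes": (
        "Thoroughly review all the sources and find me quotes about: {topic}. "
        "Be abstract if necessary and find quotes that are only tangentially "
        "related, from other disciplines, contexts, or philosophies. "
        "Make sure you provide the full quotes and their authors in your "
        "response. Be very thorough in your output and work step-by-step. "
        "Provide up to 20 examples. "
        "Begin your response with, “Sure! Here are some quotes I found:”"
    ),
    "what_would_they_say": (
        "Thoroughly review all the sources and answer this question to the "
        "best of your ability: What would {person} say about {topic}? "
        "I know they may not have said anything specifically about that "
        "topic—be very broad in your interpretation and abstract in your "
        "connections. If you can’t find anything relevant, respond with the "
        "next best quote. Be very thorough in your output and work "
        "step-by-step. Always respond with a quote, no matter what. "
        "Begin your response with, “Sure! Here's a quote from them about that:”"
    ),
}


def build_prompt(name: str, **slots: str) -> str:
    """Return the named prompt with its slots filled in.

    Raises ValueError if a slot the template needs was not supplied.
    """
    template = PROMPTS[name]
    try:
        return template.format(**slots)
    except KeyError as missing:
        raise ValueError(
            f"prompt {name!r} is missing a value for slot {missing}"
        ) from None


if __name__ == "__main__":
    # Print a filled-in prompt, ready to copy into NotebookLM by hand.
    print(build_prompt("quotes", topic="memory and discovery"))
```

Keeping the wording in one place means you can tweak a prompt once and reuse it with any topic, which is handy given how often it pays to rerun the same prompt.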

A Few Other Tips:

1) I usually refresh the page between prompts, as sometimes the model will only retrieve from its previous response.

2) If you get a bad response from these or any other prompts, it’s often worth it to just try it again. I tend to get vastly different outputs every time.

3) Sometimes it will just tell you that it can’t answer a question. If you’re pretty sure it can, simply trying the prompt again usually works.